Building trust in the security of AI systems

Based on my presentation at COSAC 2023, Ireland

AI systems require fundamental changes

Experience shows that new systems very often come with the same old security issues in a new disguise. It is tempting to think the same about AI systems: the classic CIA triad (confidentiality, integrity, availability) still applies, there is IAM to take care of, there are data protection issues, etc. Nothing new under the sun. Yet, even for the common items on the security list, AI systems are not vanilla IT systems, and the issues may require changes in the controls. You need to tread carefully and keep the peculiarities of AI systems in mind.

The difference with past experience is that we are confronted with new questions on attributes like reliability and testability. Reliability used to be mainly related to software or hardware bugs, and testability risks grew with the complexity of systems. Business as usual. Not anymore.

Consistency was considered more or less obvious, given the deterministic nature of IT systems: same input, same output. Explainability was grounded in the design of an IT system; in AI systems it is not readily available. Ethical aspects need to be checked at run time, whereas they used to be investigated at the very start of a new IT system.

For both the changed "old stuff" and the "new stuff", we must look at how to assure our stakeholders that we control the risks and that security is fine. Or not (yet).

Very short introduction to AI

The working of AI systems can be simplified into 3 steps:

- Collect massive amounts of input data relevant to what the AI system must generate, and reduce it to basic nodes.

- Create a multi-layer graph with weighted connections, based on the probability of nodes occurring together in the context of other nodes.

- Answers are the best (or good enough) paths through this huge graph, connecting the dots formed by the prompt message(s).
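To make the three steps above a little more concrete, here is a deliberately tiny toy in Python. It is a sketch under loose assumptions, not how production AI models are implemented: the "nodes" are plain words, the edge weights are the normalized counts of which word follows which in a three-sentence corpus (a single layer instead of a multi-layer graph), and an "answer" is the greedy highest-weight path starting from a prompt word. The corpus and the names `build_graph` and `best_path` are hypothetical.

```python
from collections import defaultdict

def build_graph(corpus):
    """Steps 1 and 2: reduce text to word nodes and weight each edge by the
    probability that one word follows another (toy, single-layer graph)."""
    counts = defaultdict(lambda: defaultdict(int))
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    graph = {}
    for node, successors in counts.items():
        total = sum(successors.values())
        graph[node] = {nxt: n / total for nxt, n in successors.items()}
    return graph

def best_path(graph, start, max_len=5):
    """Step 3: answer by greedily following the strongest edge from the prompt."""
    path = [start]
    while len(path) < max_len and path[-1] in graph:
        edges = graph[path[-1]]
        # Highest weight wins; ties are broken alphabetically to stay deterministic.
        path.append(max(sorted(edges), key=edges.get))
    return path

# Hypothetical training corpus.
corpus = [
    "security needs trust",
    "security needs controls",
    "security needs trust and controls",
]
graph = build_graph(corpus)
print(best_path(graph, "security"))  # ['security', 'needs', 'trust', 'and', 'controls']
```

After "needs", the path goes to "trust" only because that pair occurs twice as often as "needs controls" in the data: the answer reflects counts, not understanding.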

Despite the name AI, no intelligence is involved in any of these steps. The graph is a representation of the captured knowledge, agnostic to what it represents. Built on massive amounts of data, AI systems demonstrate impressive knowledge of "facts" and look smart.

Security: business as usual, new answers to be found

If we look at AI systems from the point of view of security, they share many concerns with other IT systems. It is mostly old stuff in new wrapping.

We care about data quality, integrity and reliability

- The source data matters, and may have undesired properties: errors, hidden bias, tainted content, etc.

- The graph may overemphasize some relations, or may be based on unexpected correlations in marginal information with no true relevance

Conclusion: we may have difficulties demonstrating data quality, integrity and reliability of the generated items

We care about privacy and security

- We should avoid re-identification after anonymization

- We must observe data expiration agreements

- We must comply with agreed usage restrictions

- We must not violate IPR

- We must observe the access control requirements

Conclusion: it is nearly impossible to guarantee any of these requirements
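One classic way to put a number on the re-identification risk listed above is k-anonymity: every combination of quasi-identifiers (attributes such as postcode or birth year that are not names but can still single someone out) should be shared by at least k records. The sketch below assumes a small hypothetical table of already "anonymized" records and hypothetical helper names. It only checks a dataset; it says nothing about what an AI model trained on that data may have memorized or can infer.

```python
from collections import Counter

def group_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)

def smallest_group(records, quasi_identifiers):
    """The 'k' in k-anonymity: size of the smallest group of look-alike records."""
    return min(group_sizes(records, quasi_identifiers).values())

def unique_records(records, quasi_identifiers):
    """Records whose quasi-identifier combination is unique: prime
    candidates for re-identification by linking with other data."""
    sizes = group_sizes(records, quasi_identifiers)
    return [r for r in records
            if sizes[tuple(r[q] for q in quasi_identifiers)] == 1]

# Hypothetical records: names removed, yet postcode and birth year remain.
records = [
    {"postcode": "1000", "birth_year": 1980, "diagnosis": "A"},
    {"postcode": "1000", "birth_year": 1980, "diagnosis": "B"},
    {"postcode": "2000", "birth_year": 1975, "diagnosis": "C"},
]
qi = ["postcode", "birth_year"]
print(smallest_group(records, qi))  # 1
print(unique_records(records, qi))
```

A result of 1 means that at least one person can be singled out by anyone who knows their postcode and birth year, even though no name is stored.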

We want accountability for data usage

- The party accountable for the generated result is the AI owner, who is probably not the data owner or custodian or … just the guy running the AI.

- The derived model provides more insight into the data, making it possible, and even likely, that prompts result in answers with a higher classification than the input data.

Conclusion: How do you convince the data officer that the data security is all fine?

AI-specific challenges

Besides the common items, there are specific things to take care of in AI systems.

Ethics

- IT systems: ethics are mostly hardcoded as defined in the reference material, not something taken care of at run time.

- AI systems: there are ethics concerns at run time.

  - The data are the data, yet they may contain hidden gems and bad surprises that prompts can reveal where predefined queries would not. The organization may have had (or may still have) hidden biases without being truly aware of them.

  - Because sheer volume of data drives the weights, minorities may be treated as less relevant for the best answer (power by numbers).

Consistency

- IT systems are mostly deterministic and therefore consistent

- AI systems may vary their answers on purpose, or after more data has been added in the learning phase.

Factuality

- IT systems present only facts (or so we believe), provided the data quality is good

- AI systems:

  - generate answers that fit the question, but may fill in some details with correctly typed, yet fictional, values.

  - mix their inputs, so answers may be contaminated with somewhat related but deviating information

Causes for malfunctioning of AI systems

At the source

The old saying "garbage in, garbage out" applies to the heavily data-dependent AI learning phases too.

Data sources

- The quality of the data on which systems are built determines the quality of the results

- Data sources specific to a company are likely classified as confidential or higher, and must be treated accordingly

Data integration

- Data comes from different sources using different formats (for instance mmddyyyy vs. ddmmyy), increasing the risk of mistakes

- Data representation may differ by region ("evening" is not a precise time indication)

- Different units of measurement make a difference (mile vs. kilometer, gallon vs. liter)
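A small illustration of these integration risks: the same eight digits parse into two different, equally valid dates depending on the assumed format, and a number silently changes meaning when its unit is lost. The sketch assumes ddmmyyyy rather than the ddmmyy mentioned above, so that both formats have the same length; the table and function names are hypothetical, and the conversion factors are exact by definition (1 international mile = 1.609344 km, 1 US gallon = 3.785411784 L).

```python
from datetime import datetime

raw = "03042023"  # the same eight digits arriving from two hypothetical sources

# Source A assumes mmddyyyy, source B assumes ddmmyyyy: both parses succeed silently.
as_mmdd = datetime.strptime(raw, "%m%d%Y").date()
as_ddmm = datetime.strptime(raw, "%d%m%Y").date()
print(as_mmdd, as_ddmm)  # 2023-03-04 2023-04-03

# Conversion tables to a single base unit (factors exact by definition).
TO_KM = {"km": 1.0, "mile": 1.609344}
TO_LITER = {"liter": 1.0, "us_gallon": 3.785411784}

def normalize(value, unit, table):
    """Convert `value` to the base unit of `table`; refuse unknown units instead of guessing."""
    if unit not in table:
        raise ValueError(f"unknown unit: {unit!r}")
    return value * table[unit]

print(normalize(100, "mile", TO_KM))         # about 160.93 km, not 100
print(normalize(10, "us_gallon", TO_LITER))  # about 37.85 liters, not 10
```

Neither date parse raises an error, which is the point: the mistake only surfaces if the source format is recorded and checked explicitly before the data is merged.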

Statistics has produced scientific methods to detect outliers and to compute reliable probabilities based on the type of distribution and on measures such as the standard deviation.

- AI systems have no such well-founded measures

- Hidden influencing factors and confounding variables matter: how does an AI deal with such misleading cases?
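For comparison, this is the kind of underpinned measure classical statistics offers: a z-score flags values that lie more than a chosen number of standard deviations from the mean. The sketch uses only Python's standard `statistics` module; the threshold of 2 and the readings are illustrative assumptions, and the method presumes roughly normally distributed data, exactly the kind of explicit assumption an AI learning phase does not state.

```python
import statistics

def z_score_outliers(values, threshold=2.0):
    """Return values lying more than `threshold` sample standard deviations
    from the mean. Assumes roughly normally distributed data."""
    mean = statistics.mean(values)
    stdev = statistics.stdev(values)
    return [v for v in values if abs(v - mean) / stdev > threshold]

# Hypothetical sensor readings with one suspicious entry (e.g. a unit mix-up).
readings = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 10.1, 16.1]
print(z_score_outliers(readings))  # [16.1]
```

Even this classical method needs care: with only eight readings, the largest attainable z-score is about 2.47, so a threshold of 3 would never flag anything here.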

Built-in error rate

Given the nature of AI systems as idiot savants that have memorized zillions of data points about everything, there are new concerns. To trust the output of an AI, we cannot rely on the input data, nor on the result of the processing, nor on the generation.
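A back-of-the-envelope sketch of why no single stage can carry the trust: if the input data, the processing and the generation are each correct with some probability and, as a simplifying assumption, their failures are independent, the chance of a fully correct answer is the product of the three. The 95% figures are purely illustrative, not measurements of any real system.

```python
import math

def end_to_end_reliability(stage_reliabilities):
    """Probability that every stage is correct, assuming independent failures."""
    return math.prod(stage_reliabilities)

# Illustrative, hypothetical per-stage reliabilities.
stages = {"input data": 0.95, "processing": 0.95, "generation": 0.95}
overall = end_to_end_reliability(stages.values())
print(f"{overall:.3f}")  # 0.857
```

Three stages that are each right 95% of the time yield a fully correct answer only about 86% of the time; correlated failures in practice can make this better or worse.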

Building trust

Testability and corrections

One common way to increase confidence in systems is testing. For critical systems, the goal is full test coverage: making sure all code has been executed at least once and all results are correct. The problem with AI is that the potential bugs are not in the code. The process is data-driven, so errors hide in the massive amount of data …

Worse, even if you could find the data that is (co-)responsible for a bad answer, which corrections to the model would fix it without breaking things elsewhere?
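Testing a data-driven system therefore yields a statistical statement rather than a coverage statement. A well-known rule of thumb, the "rule of three", shows the limit: if n independent, representative test cases all pass, an approximate 95% upper bound on the true error rate is still 3/n. The sketch computes both that approximation and the exact bound it approximates; the test counts are illustrative and the independence of test cases is an assumption.

```python
def rule_of_three(n_passed):
    """Approximate 95% upper bound on the error rate after n passing tests."""
    return 3 / n_passed

def exact_upper_bound(n_passed, confidence=0.95):
    """Largest error rate p for which n passes in a row still have probability
    of at least 1 - confidence: solves (1 - p) ** n = 1 - confidence."""
    return 1 - (1 - confidence) ** (1 / n_passed)

for n in (100, 1_000, 10_000):
    print(n, round(rule_of_three(n), 5), round(exact_upper_bound(n), 5))
```

Even ten thousand clean test runs only indicate that the error rate is probably below about 0.03%, and they say nothing about which data would cause the failures or how to correct them.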

Explainability

If the system can explain how it arrived at the result in terms that are clear or can themselves be checked, trust in the system increases a lot. However, if explainability is implemented by prompting "please explain", that is just another query, not necessarily a true analysis of the previous one.

Transparency

Transparency would help to overcome the black box (or magic box?) feeling of AI systems. For search engine queries, you select among the resulting links based on your trust in their origin and on how closely their key topics seem to match your question. The way an AI model is constructed makes this kind of source assessment hard.

Consistency

We are used to consistency when dealing with IT systems: the same usage leads to the same results, barring minor, irrelevant changes in how the results are presented. AI systems use limited randomness to mimic humans: if the first answer does not seem to please, they provide another angle on the same question. By following alternative paths through links of very similar strength in the graph, they give a different answer. This is not the same as giving more details or refining the answer. Experiencing such a "change of mind" undermines trust in a system.
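The mechanism behind such a "change of mind" can be sketched with the common softmax-with-temperature sampling scheme; whether and how a given product uses it is an assumption, as configurations differ. When two candidates have almost the same score, sampling picks either of them, whereas greedy selection (temperature 0) always returns the same one. The candidate answers, scores and the helper name `sample_answer` are hypothetical.

```python
import math
import random
from collections import Counter

def sample_answer(scores, temperature, rng):
    """Pick one candidate. Temperature 0 means greedy (always the top score);
    higher temperatures flatten the softmax and let alternatives through."""
    if temperature == 0:
        return max(scores, key=scores.get)
    weights = [math.exp(s / temperature) for s in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]

# Hypothetical candidate answers with very similar link strengths at the top.
scores = {"approve": 2.00, "reject": 1.95, "escalate": 0.50}
rng = random.Random(42)  # fixed seed so the experiment itself is repeatable

for temperature in (0, 1.0):
    answers = Counter(sample_answer(scores, temperature, rng) for _ in range(100))
    print(temperature, dict(answers))
```

Even the greedy setting only guarantees consistency for a frozen model: retraining with more data can still change the answer.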

There are different variants of consistency between answer and explanation:

- Factual consistency: Are the given facts consistent?

- Logical consistency: Is the same reasoning and flow in both?

- Clarity and comprehensibility: Is the explanation clear? Is it complementary to the decision, or just a rephrasing of it?

- Alignment with guidelines: Are there clear guidelines for formulating both, and are they followed?

Which of these matter most depends on the context.

Metrics for decision making AI systems

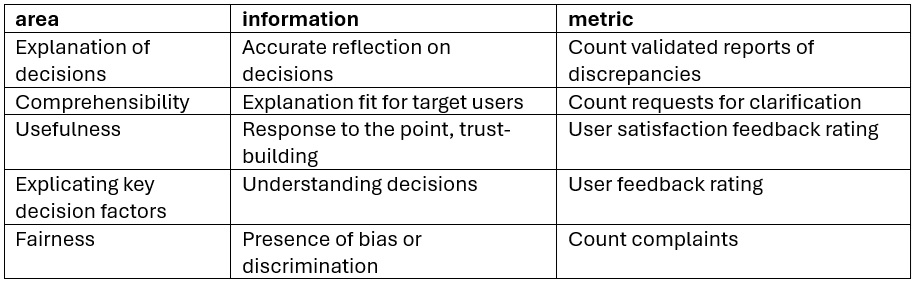

Having metrics to judge AI decision making systems are a great thing. Wishful thinking would allow to obtain the following set of metrics to start with:

Conclusion

Humans have long been trying to understand what human intelligence is. Computers have played a major role in challenging attempts to pinpoint it. In a new incarnation of the question, humans ask themselves: when are computers intelligent? In both cases, the temptation to equate intelligence with intelligent behavior is strong. As an example, the Turing test uses the criterion that if a computer is indistinguishable from a human party in a conversation (so: behavior), that computer must be intelligent.

It should be clear that an AI system is not intelligent. It is a great statistics-like data processor, with impressive behavior for many tasks. Just like with other forms of statistics, errors come for free with it. Sometimes that is acceptable, sometimes not. Sometimes you can compensate for mistakes, sometimes not.

Security must find a way to establish risks and define controls to allow AI system usage in a responsible way. It will not be simply business as usual.